In the clean, ordered world of a CAD tool, a high-speed circuit design exists as a perfect abstraction. Traces are ideal conductors, layers are perfectly aligned, and performance matches the precise predictions of a simulation. The gap between this digital blueprint and a physical board that can be reliably manufactured by the thousands, however, is where disciplined engineering truly begins. This is the domain of Design for Manufacturability (DFM), a practice less about adding costly features and more about developing an intuition for the physical world.

Effective design on a constrained budget is an exercise in deliberate trade-offs. It means favoring the known quantities of high-volume materials like FR-4 and the predictable processes of a 4- or 6-layer build. It recognizes that intelligent routing is free, while manufacturing steps like via-in-pad filling or back-drilling carry a real cost. The objective is not perfection but a robust and repeatable product. It is about knowing when a looser impedance tolerance of ±10% is sufficient for the system, sparing the fabricator from chasing a needlessly tight ±5% target. This is the judgment that prevents costly errors and ensures a design survives its journey from screen to reality.

The Contract of Creation: Defining the Layer Stackup

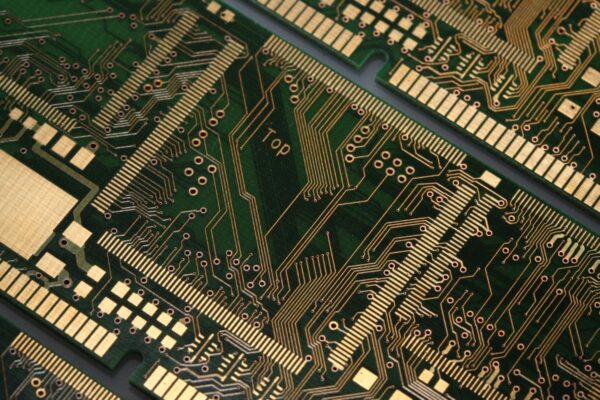

The layer stackup document is the single most important contract between a designer and a fabricator. It is the definitive recipe, and any ambiguity within it is an invitation for assumptions. Those assumptions, made by a fabricator trying to interpret an incomplete instruction set, are the primary cause of impedance mismatches and inconsistent performance between production runs.

A truly manufacturable stackup leaves no room for interpretation. It must be exhaustive, specifying for every layer its number, its type, the exact material (for example, Isola 370HR rather than a generic “FR-4 equivalent”), and that material’s dielectric constant (Dk). The thickness of every copper and dielectric layer, along with the copper weight, must be called out. This level of detail feels pedantic until you consider the physics. Laminates sold as “FR-4” span a range of Dk values, and because trace impedance depends directly on Dk, substituting one for another can shift impedance enough to push a trace out of tolerance, turning a functional prototype into a field failure.
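To make the Dk sensitivity concrete, the sketch below uses the widely quoted closed-form surface-microstrip approximation often attributed to IPC-2141. The geometry (5 mil dielectric, 9 mil trace, roughly 1 oz copper) and the three Dk values are assumptions chosen for illustration, and the formula itself is only a rough estimate; a field solver or the fabricator's own tools should be used for real stackups.

```python
import math

def microstrip_z0(dk: float, h: float, w: float, t: float) -> float:
    """Approximate single-ended surface-microstrip impedance in ohms.

    Closed-form approximation often attributed to IPC-2141:
        Z0 = 87 / sqrt(dk + 1.41) * ln(5.98 * h / (0.8 * w + t))
    h = dielectric height, w = trace width, t = copper thickness,
    all in the same unit (mils here). Rough estimate only.
    """
    return 87.0 / math.sqrt(dk + 1.41) * math.log(5.98 * h / (0.8 * w + t))

# Hypothetical geometry: 5 mil dielectric, 9 mil trace, ~1.4 mil (1 oz) copper.
H, W, T = 5.0, 9.0, 1.4

# Hypothetical spread of Dk values across laminates all sold as "FR-4".
for dk in (3.8, 4.2, 4.6):
    print(f"Dk={dk}: Z0 ~ {microstrip_z0(dk, H, W, T):.1f} ohm")
```

With these assumed numbers the estimate moves by a little over 3 Ω from the lowest to the highest Dk, with nothing changed in the layout. That is a large share of a ±10% budget on a trace of this impedance.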

From this foundation, the specification for controlled impedance follows naturally. Simulation is only a starting point. To ensure the physical board matches your intent, the fabrication notes must contain explicit, manufacturable instructions: the target impedance and its tolerance, such as 90 Ω ±10% differential, and the specific layers, trace widths, and (for differential pairs) spacings to which the rule applies.

Then comes the crucial statement, the one that bridges the gap between your design and the fabricator’s process: “Fabricator to adjust trace/space and dielectric thickness to meet impedance target. Final stackup requires approval.” This single line is non-negotiable. It empowers the fabricator to use their specific materials and process window to achieve your electrical goal, while giving you the final sign-off on the physical construction. It transforms the relationship from one of dictation to one of collaboration.
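One way to keep these notes consistent across revisions is to generate them from structured data rather than retyping them. The sketch below assumes a hypothetical `ImpedanceRule` record and layer names like `L1`; only the final sentence is taken verbatim from the note quoted above, and the exact wording of the other lines is an illustration, not a fabricator-mandated format.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ImpedanceRule:
    """One controlled-impedance requirement (hypothetical data model)."""
    target_ohms: float
    tolerance_pct: float
    differential: bool
    layers: tuple[str, ...]
    width_mil: float
    spacing_mil: Optional[float] = None  # only meaningful for differential pairs

# The collaboration clause, copied verbatim from the fabrication note above.
ADJUST_NOTE = ("Fabricator to adjust trace/space and dielectric thickness to meet "
               "impedance target. Final stackup requires approval.")

def fab_notes(rules: list[ImpedanceRule]) -> list[str]:
    """Render one note line per rule, followed by the adjustment clause."""
    notes = []
    for r in rules:
        kind = "differential" if r.differential else "single-ended"
        geometry = f"{r.width_mil:g} mil trace"
        if r.spacing_mil is not None:
            geometry += f" / {r.spacing_mil:g} mil space"
        notes.append(f"{r.target_ohms:g} ohm ±{r.tolerance_pct:g}% {kind} on "
                     f"{', '.join(r.layers)}, {geometry}.")
    notes.append(ADJUST_NOTE)
    return notes

# Hypothetical rules: a 90 ohm pair on the outer layers, a 50 ohm trace on L1.
rules = [
    ImpedanceRule(90, 10, True, ("L1", "L6"), 5, 7),
    ImpedanceRule(50, 10, False, ("L1",), 6),
]
notes = fab_notes(rules)
for line in notes:
    print(line)
```

Because the adjustment clause is appended unconditionally, it cannot be dropped when someone edits a single rule.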

And what of the final copper surface? At frequencies pushing past 10 GHz, the skin effect confines the current to a thin layer at the conductor surface, making the surface finish a relevant factor. ENIG places a layer of nickel, which is both more resistive than copper and ferromagnetic, in that current path, and this can increase insertion loss. For these demanding applications, OSP can offer a cleaner signal path because it leaves the copper itself as the conducting surface. Yet this is a classic engineering trade-off. ENIG is durable, with a long shelf life and good tolerance of multiple reflow cycles, while OSP has a shorter shelf life and handles multiple reflow cycles poorly. For the vast majority of digital high-speed designs, the process reliability of ENIG makes it the pragmatic and entirely acceptable choice.
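The depth of that surface layer follows from the standard skin-depth formula, δ = sqrt(ρ / (π f μ0 μr)). The sketch below evaluates it for copper, assuming an approximate room-temperature resistivity of 1.68e-8 Ω·m and μr = 1; it does not model nickel, whose effective permeability at gigahertz frequencies varies and is not assumed here.

```python
import math

MU0 = 4e-7 * math.pi   # permeability of free space, H/m (classic defined value)
RHO_CU = 1.68e-8       # approximate resistivity of copper, ohm*m (assumption)

def skin_depth_m(freq_hz: float, rho: float = RHO_CU, mu_r: float = 1.0) -> float:
    """Skin depth delta = sqrt(rho / (pi * f * mu0 * mu_r)), in metres."""
    return math.sqrt(rho / (math.pi * freq_hz * MU0 * mu_r))

for ghz in (1, 5, 10, 20):
    print(f"{ghz:>2} GHz: {skin_depth_m(ghz * 1e9) * 1e6:.2f} um")
```

Under these assumptions the copper skin depth at 10 GHz comes out at roughly 0.65 µm, far thinner than the few micrometres of nickel in a typical ENIG finish, which is why the finish matters at these frequencies.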

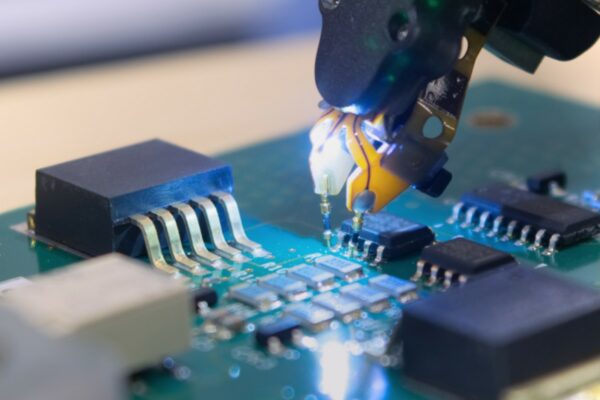

The final proof of this contract is the impedance test coupon. It is not an optional add-on but the physical evidence that the board in your hands meets the specification. Built on the same panel with the same process, the coupon is measured with a time-domain reflectometer (TDR), and the resulting report is your verification. Without it, you are simply trusting that everything went according to plan. The coupon is the difference between assuming your board is correct and knowing it is.
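The arithmetic behind a TDR reading is the reflection-coefficient relation Z = Z_ref (1 + ρ) / (1 − ρ). The sketch below converts an assumed coupon reading into an impedance and checks it against a tolerance band. The reference impedance and the ρ values are hypothetical, and a real instrument also accounts for probe, fixture, and multiple-reflection effects that this ignores.

```python
def impedance_from_rho(rho: float, z_ref: float = 50.0) -> float:
    """Impedance implied by a TDR reflection coefficient rho against z_ref."""
    if not -1.0 < rho < 1.0:
        raise ValueError("reflection coefficient must lie strictly between -1 and 1")
    return z_ref * (1.0 + rho) / (1.0 - rho)

def within_tolerance(measured: float, target: float, tol_pct: float) -> bool:
    """True if measured lies inside target +/- tol_pct percent (inclusive)."""
    band = target * tol_pct / 100.0
    return target - band <= measured <= target + band

# Hypothetical differential coupon, measured against a 100 ohm reference.
for rho in (-0.05, -0.12):
    z = impedance_from_rho(rho, z_ref=100.0)
    print(f"rho={rho:+.2f}: {z:.1f} ohm, "
          f"90 ohm ±10%: {within_tolerance(z, 90, 10)}, "
          f"±5%: {within_tolerance(z, 90, 5)}")
```

A reading of ρ = −0.05 implies about 90.5 Ω and passes either band. A reading of ρ = −0.12 implies about 78.6 Ω and fails even the looser ±10% band.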

The Vertical Path: Where Density and Risk Collide

The choice of via technology is a direct negotiation between routing density, manufacturing cost, and process risk. Standard through-hole vias are the workhorse. They are the cheapest and most reliable option, and they should be the default wherever board space allows. Their manufacturability is unmatched.

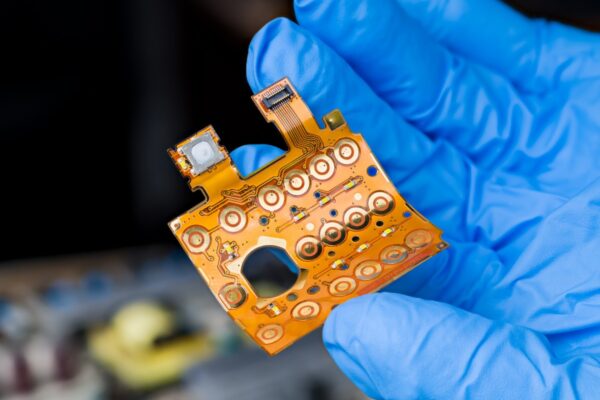

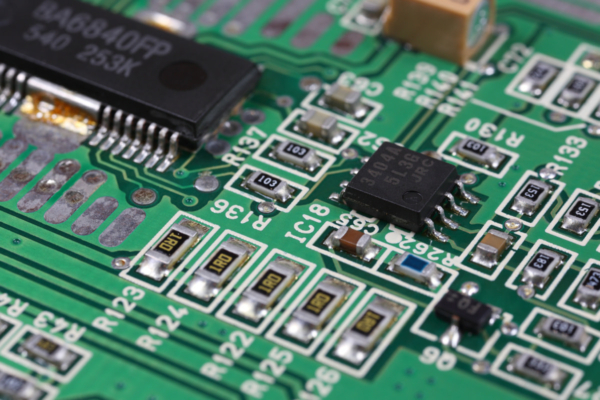

The push for density, however, often leads to via-in-pad, a technique essential for fanning out modern high-pin-count BGAs. It solves a routing problem but introduces a manufacturing requirement. The via barrel, now sitting directly in a component’s solder pad, must be filled (typically with non-conductive epoxy) and plated over flat. This adds roughly 10-15% to the board cost and, more importantly, depends on a fabrication note that must not be omitted.

For the most extreme density challenges, such as routing 0.5 mm pitch BGAs, designers must turn to laser-drilled microvias. This decision moves the board into an entirely different class of manufacturing known as high-density interconnect (HDI), which typically involves sequential lamination and can easily increase board cost by 50% to 200%. It is a solution born of necessity, to be used only when routing is physically impossible by other means.
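The cost ranges quoted above can be turned into a quick budgeting estimate. This is a minimal sketch that treats each uplift as a simple multiplier on a hypothetical baseline board cost. Real quotes depend on the fabricator, volume, and layer count, and an HDI board may combine several of these features rather than adding them independently.

```python
# Uplift ranges quoted in the text, as fractions of the baseline board cost.
UPLIFT = {
    "standard": (0.0, 0.0),
    "via_in_pad": (0.10, 0.15),   # filled and plated over
    "hdi_microvia": (0.50, 2.00), # sequential-lamination HDI
}

def cost_range(baseline: float, technology: str) -> tuple[float, float]:
    """Low/high per-board cost estimate for a via technology."""
    lo, hi = UPLIFT[technology]
    return baseline * (1 + lo), baseline * (1 + hi)

baseline = 20.0  # hypothetical per-board cost, any currency
for tech in UPLIFT:
    lo, hi = cost_range(baseline, tech)
    print(f"{tech:>13}: {lo:6.2f} - {hi:6.2f}")
```

Even as a crude estimate, the spread makes the point of the text: HDI can cost more than the rest of the board.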

It is within this world of vias that one of the most common and damaging DFM failures occurs. An engineer seeking density uses via-in-pad but fails to specify “filled and plated over” in the fabrication notes. In the CAD tool, the BGA fanout looks clean. On the assembly line, a different story unfolds. During reflow, the unfilled via barrel acts like a tiny straw: molten solder from the BGA ball is wicked down into the via by capillary action, starving the joint. The result is a weak connection or a complete open circuit. The weak joints are the more dangerous case, because they are latent defects that may not appear until after months of thermal cycling in the field. The entire failure traces back to a single missing line in a fabrication document.
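Because the failure comes down to a missing note, it can be caught with a simple pre-release check. The sketch below assumes a hypothetical data model (`Pad`, `Via`) exported from the CAD tool. It treats each pad as its bounding rectangle and tests only the via centre, both simplifications, and it flags any via-in-pad when the fabrication notes lack the phrase “filled and plated over”.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pad:
    ref: str   # hypothetical pad name, e.g. "U1.A1"
    x: float   # pad centre, mm
    y: float
    w: float   # bounding-box width, mm
    h: float   # bounding-box height, mm

@dataclass(frozen=True)
class Via:
    x: float   # via centre, mm
    y: float

REQUIRED_NOTE = "filled and plated over"

def via_in_pad_hits(vias, pads):
    """Return (pad ref, via) pairs where a via centre lies inside a pad's box."""
    hits = []
    for v in vias:
        for p in pads:
            if abs(v.x - p.x) <= p.w / 2 and abs(v.y - p.y) <= p.h / 2:
                hits.append((p.ref, v))
    return hits

def check_fill_note(vias, pads, fab_notes: str) -> list[str]:
    """Report every via-in-pad if the fab notes never request filling."""
    hits = via_in_pad_hits(vias, pads)
    if hits and REQUIRED_NOTE not in fab_notes.lower():
        return [f"{ref}: via-in-pad at ({v.x}, {v.y}) but fab notes lack "
                f"'{REQUIRED_NOTE}'" for ref, v in hits]
    return []

# Hypothetical BGA corner: one via lands in pad A1, one sits between pads.
pads = [Pad("U1.A1", 0.0, 0.0, 0.4, 0.4), Pad("U1.A2", 0.8, 0.0, 0.4, 0.4)]
vias = [Via(0.0, 0.05), Via(0.4, 0.0)]
print(check_fill_note(vias, pads, "Surface finish: ENIG."))
```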

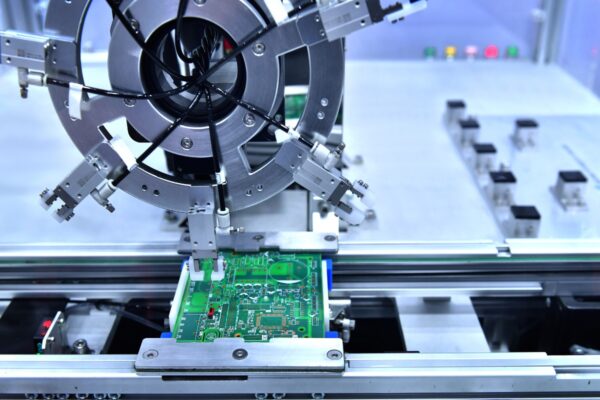

The Final Test: Assembly and the Physical Board

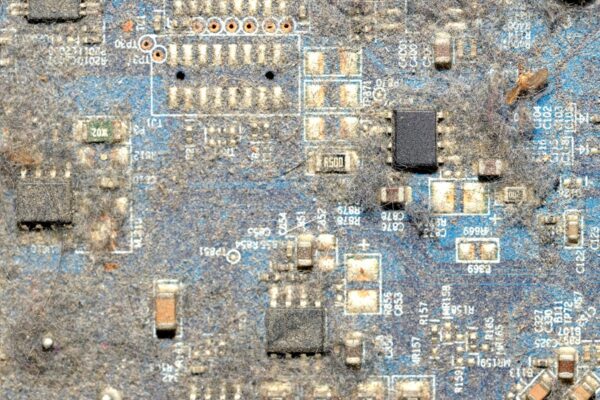

A design’s journey does not end at fabrication. The board must survive the trial by fire of the assembly line, and a layout that is difficult to assemble will be impossible to produce reliably at scale.

Component placement has a direct impact on soldering yield. Similar parts, especially polarized components like diodes, should be oriented in the same direction to simplify both automated and manual inspection. A minimum spacing of 20 mils (about 0.5 mm) between small passives is needed to prevent solder bridging. For larger components like BGAs, a clearance of 3-5 mm is not a luxury; it is a requirement for rework tools and test socket latches.
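These spacing rules are easy to check automatically. The sketch below assumes a hypothetical `Part` record with axis-aligned body rectangles in millimetres and two hypothetical categories, `"passive"` and `"bga"`. It measures edge-to-edge gaps and applies the 20 mil passive rule and the low end of the 3-5 mm BGA clearance; real DRC engines work from courtyards and handle rotation, which this does not.

```python
import math
from dataclasses import dataclass

MIL_TO_MM = 0.0254
MIN_PASSIVE_GAP_MM = 20 * MIL_TO_MM  # 20 mil between small passives
MIN_BGA_CLEARANCE_MM = 3.0           # low end of the 3-5 mm guideline

@dataclass(frozen=True)
class Part:
    ref: str
    kind: str  # "passive", "bga", or other (hypothetical categories)
    x: float   # body centre, mm
    y: float
    w: float   # body width, mm
    h: float   # body height, mm

def edge_gap(a: Part, b: Part) -> float:
    """Shortest edge-to-edge distance between two axis-aligned bodies."""
    dx = max(0.0, abs(a.x - b.x) - (a.w + b.w) / 2)
    dy = max(0.0, abs(a.y - b.y) - (a.h + b.h) / 2)
    return math.hypot(dx, dy)

def spacing_violations(parts):
    """Return (ref_a, ref_b, gap_mm, required_mm) for each violating pair."""
    out = []
    for i, a in enumerate(parts):
        for b in parts[i + 1:]:
            if "bga" in (a.kind, b.kind):
                need = MIN_BGA_CLEARANCE_MM
            elif a.kind == b.kind == "passive":
                need = MIN_PASSIVE_GAP_MM
            else:
                continue  # no rule defined for this pair in the sketch
            gap = edge_gap(a, b)
            if gap < need:
                out.append((a.ref, b.ref, round(gap, 3), need))
    return out

# Hypothetical placement: R1 and R2 are only 0.3 mm apart.
parts = [
    Part("R1", "passive", 0.0, 0.0, 1.0, 0.5),
    Part("R2", "passive", 1.3, 0.0, 1.0, 0.5),
    Part("C1", "passive", 0.0, 2.0, 1.0, 0.5),
    Part("U1", "bga", 10.0, 0.0, 10.0, 10.0),
]
print(spacing_violations(parts))
```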

The board itself has a physical presence. A design that clusters all heavy components on one side creates an imbalanced thermal mass, which can cause the board to warp in the reflow oven. Small components should never be placed in the thermal “shadow” of taller parts, which can block the flow of hot air and lead to incomplete solder joints.

This physical reality becomes most apparent during panelization, the process of arranging boards into a larger array for efficient production. A poorly designed panel can destroy yield. The frame must be rigid enough to prevent the array from sagging under its own weight in the reflow oven, a common cause of fractured BGA joints.

Breakaway methods matter. V-scoring provides clean edges, while “mouse bites” (perforated breakaway tabs) must be placed where their remaining stubs will not interfere with the final product enclosure. On this panel, fiducial marks serve as the critical reference points, with global marks for the entire array and local fiducials near any fine-pitch component, ensuring the placement machine knows precisely where to go. This is the final translation of digital intent into a physical, repeatable, and ultimately successful product.
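The choice of breakaway method also affects how many boards fit on a panel. The sketch below counts copies in a simple rectangular array, assuming a breakaway rail of fixed width on every edge and a uniform gap between boards. A zero gap stands in for V-scored boards butted edge to edge, and a nonzero gap stands in for the routing channel that tab-routed (mouse-bite) panels need. All dimensions are hypothetical, and real panels also reserve space for fiducials, tooling holes, and tabs.

```python
def boards_per_panel(panel_w, panel_h, board_w, board_h, rail, gap):
    """Columns and rows of boards that fit in a rectangular panel array.

    rail: frame width on each panel edge; gap: spacing between boards.
    n boards fit along an axis when n * board + (n - 1) * gap <= usable.
    All dimensions in mm.
    """
    usable_w = panel_w - 2 * rail
    usable_h = panel_h - 2 * rail
    if usable_w < board_w or usable_h < board_h:
        return 0, 0
    cols = int((usable_w + gap) // (board_w + gap))
    rows = int((usable_h + gap) // (board_h + gap))
    return cols, rows

# Hypothetical 250 x 200 mm panel, 47 x 37 mm board, 5 mm rails.
for label, gap in (("V-score (no gap)", 0.0), ("tab-routed, 2.5 mm channel", 2.5)):
    cols, rows = boards_per_panel(250, 200, 47, 37, 5, gap)
    print(f"{label}: {cols} x {rows} = {cols * rows} boards")
```

With these assumed sizes the V-scored array holds 25 boards and the tab-routed array 16, a reminder that panel design is also a cost decision.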